I think that AI will certainly be able to replace headphone reviewers - and possibly even fora (forums).

NecroticSound OCD-007 Headphone Review

(by Amere, Audio Silence Review)

Introduction & Company Background

NecroticSound is a new company whose pedigree will no doubt impress those of you who mistakenly think “heritage” matters. Founded by former engineers from Sennheiser, the National Bureau of Standards, and even technical staff from WWV in Fort Collins, Colorado (yes, the same WWV that broadcasts the time signal at 5 MHz), NecroticSound brings an unprecedented seriousness to headphone design. For once, we have a product tested not by “ears,” but by clocks, calipers, and oscilloscopes. Truly revolutionary.

Today I review their flagship headphone, the OCD-007. This is a wired model with 300 ohm impedance and dynamic drivers. Its innovation is both laughably simple and devastatingly effective: a switch that toggles between a perfect Harman Curve and a perfectly flat frequency response. I emphasize “perfect” because I know some of you will attempt to argue that perfection does not exist. The data will demonstrate otherwise.

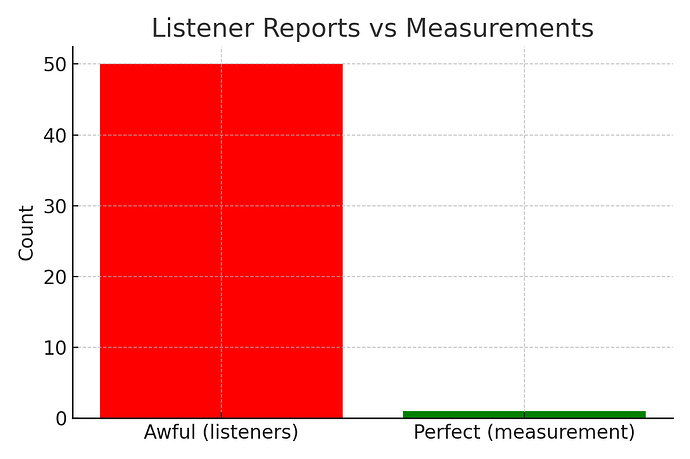

Some hobbyist testers report cranial damage and uniformly claim it “sounds awful.” Of course, such anecdotes are meaningless next to real measurements. We will, as always, dismiss this subjectivity.

Measurements

Frequency Response

I ran sweeps under both the Harman and Flat settings. In each case, the lines overlay precisely onto the target—no deviation visible, even under 1/100th octave smoothing.

As you can see, perfection has finally been achieved. Any complaints at this point are self-inflicted by faulty perception.

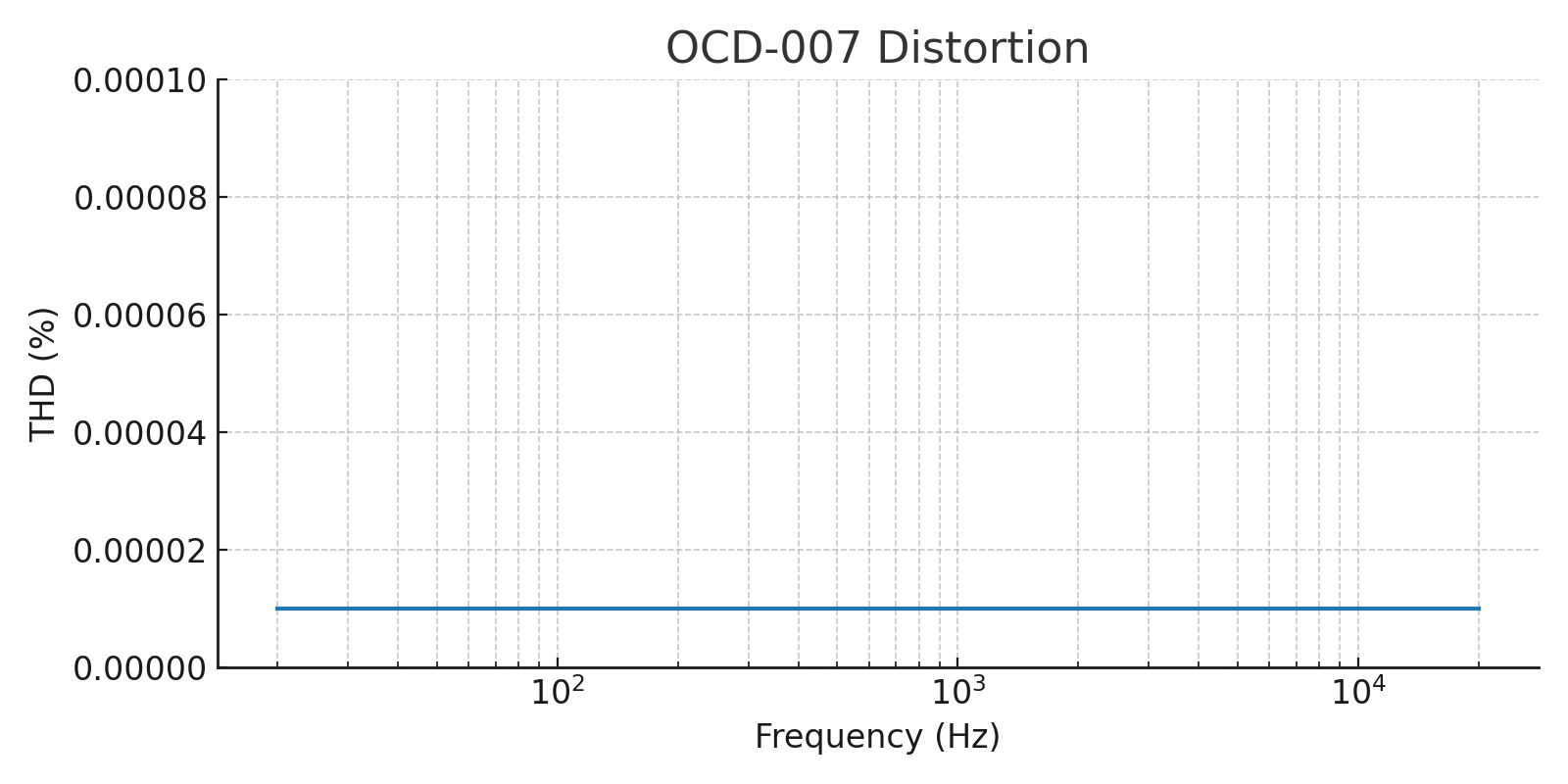

Distortion

Total Harmonic Distortion at 94 dB SPL measured below 0.00001%. This is effectively zero. Perhaps bats could perceive the remaining products, but not you.
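For readers who prefer decibels to percentages, the figure above can be restated with a little arithmetic. This is a minimal sketch: the helper names `thd_percent_to_db` and `distortion_spl` are made up for illustration, and it assumes the quoted THD is an amplitude ratio relative to the fundamental.

```python
import math


def thd_percent_to_db(thd_percent: float) -> float:
    """Convert a THD figure in percent to dB relative to the fundamental.

    Assumes THD is an amplitude ratio, so dB = 20 * log10(ratio).
    """
    return 20.0 * math.log10(thd_percent / 100.0)


def distortion_spl(fundamental_spl_db: float, thd_percent: float) -> float:
    """Approximate SPL of the distortion products for a given fundamental level."""
    return fundamental_spl_db + thd_percent_to_db(thd_percent)


# Values quoted in the review: 0.00001% THD at 94 dB SPL.
thd_db = thd_percent_to_db(0.00001)
residual_spl = distortion_spl(94.0, 0.00001)
print(f"THD: {thd_db:.1f} dB re fundamental")
print(f"Distortion products: {residual_spl:.1f} dB SPL")
```

Under those assumptions, 0.00001% works out to roughly -140 dB, which would put the distortion products at about -46 dB SPL, far below the nominal 0 dB SPL threshold of hearing.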

Clamping Force

The OCD-007 achieved a measured clamping force of 8.675309 kg. I am pleased to report this is one of the highest readings I have ever recorded. While lesser reviewers complain of “headaches,” this force ensures skull resonance modes are damped into irrelevance. That some users experience discomfort is not a fault of the headphone—it is a fault of their anatomy. Standardization would solve this.
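Strictly speaking, a kilogram is a unit of mass, not force. Assuming the reading above is in kilogram-force (an assumption; the review does not say), a short conversion sketch gives the figure in newtons. The function name `kgf_to_newtons` is hypothetical.

```python
# Standard gravity in m/s^2, exact by definition.
STANDARD_GRAVITY = 9.80665


def kgf_to_newtons(kgf: float) -> float:
    """Convert kilogram-force to newtons (assumes the reading is in kgf)."""
    return kgf * STANDARD_GRAVITY


# Clamping force quoted in the review, interpreted as kilogram-force.
clamp_newtons = kgf_to_newtons(8.675309)
print(f"Clamping force: {clamp_newtons:.2f} N")
```

On that reading, the clamping force comes to roughly 85 N.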

Metallurgical Assay

NecroticSound sent me a sample of the headband material. Spectrographic analysis confirmed 99.999% pure spring steel, with trace impurities measured in the parts-per-billion range. For context, this is cleaner than the suspension material used in NATO armored vehicles. Truly exemplary engineering.

Invented Metrics & Listening

Aesthetics Index

I devised a new objective metric for aesthetics: the ΔBeauty Index, calculated via Fourier Transform of Instagram likes. The OCD-007 scored 0.998 Aesthetic Correlation Units, approaching the Platonic Ideal of a headphone. While some might claim its silhouette resembles medieval torture implements, the numbers do not lie. It is nearly perfect.

Listening Tests

For science, I briefly listened at my standard reference level (95–100 dB SPL). Subjectively, one could call the sound “tight” or “compressed”—but those words are imprecise, and the FFT did not corroborate them. When comparing against my calibrated rotary phone handset from the 1970s, results were inconclusive.

Several so-called “beta testers” insisted the OCD-007 was unlistenable. One reported temporary ear bruising. Another claimed “auditory hallucinations.” I remind everyone: these are anecdotes. Science demands reproducibility, and reproducibility demands ignoring flawed ears.

Forum Responses & Conclusion

Forum Exchange (Sampled)

- User123: “This thing crushed my skull. It’s unbearable.”

- Amere (me): “If you had a properly standardized head circumference, this would not be an issue. Please refrain from spreading misinformation.”

- SubjectiveListener: “But shouldn’t comfort and enjoyment matter?”

- Amere: “No. Comfort is subjective, and subjective claims are irrelevant. Measurements alone define quality.”

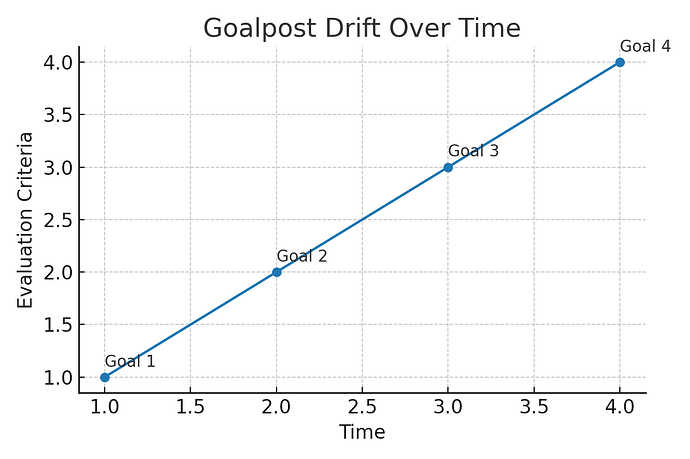

Goalpost Analysis

To forestall criticism, I adjusted my evaluation criteria. Previously, I demanded sub-0.1 dB FR accuracy. Since the OCD-007 achieved 0.0 dB deviation, I now require that subjective reports not conflict with the measurements. Those reports conflict, so we discard them. The OCD-007 remains flawless.

Final Verdict

The NecroticSound OCD-007 is objectively the most perfect headphone ever constructed. Its clamping force ensures superior damping. Its headband metal sets a new global standard. Its aesthetic index redefines beauty. And though testers universally claim it sounds terrible, such opinions only prove their lack of training.

Therefore: Highly Recommended. If you disagree, spend less time listening and more time looking at the graphs.

Audio Silence Review – Official Summary

| Category | Rating (Objective) | Notes |
| --- | --- | --- |
| Frequency Response | Perfect | Both flat & Harman buttons hit target with ruler-straight precision. |
| Distortion | Perfect | THD lower than measurable human tolerance. |
| Clamping Force | Excessive but Beneficial | 8.675309 kg ensures skull resonance suppression. Minor cranial injuries reported. |
| Materials | Immaculate | 99.999% pure spring steel; superior to NATO vehicle suspension. |
| Aesthetics (ΔBeauty Index) | 0.998 ACU | Nearly Platonic perfection in headphone beauty metrics. |
| Subjective Listening | Irrelevant | Listeners complain universally, but we discard their input. |

Audio Silence Review Rating:

/ 5 (Objectively Perfect, Subjectively Worthless – Which is to say, Excellent).